Deploying CDP Flip

This section provides detailed instructions for deploying CDP Flip on AWS using Devtron.

Prerequisites

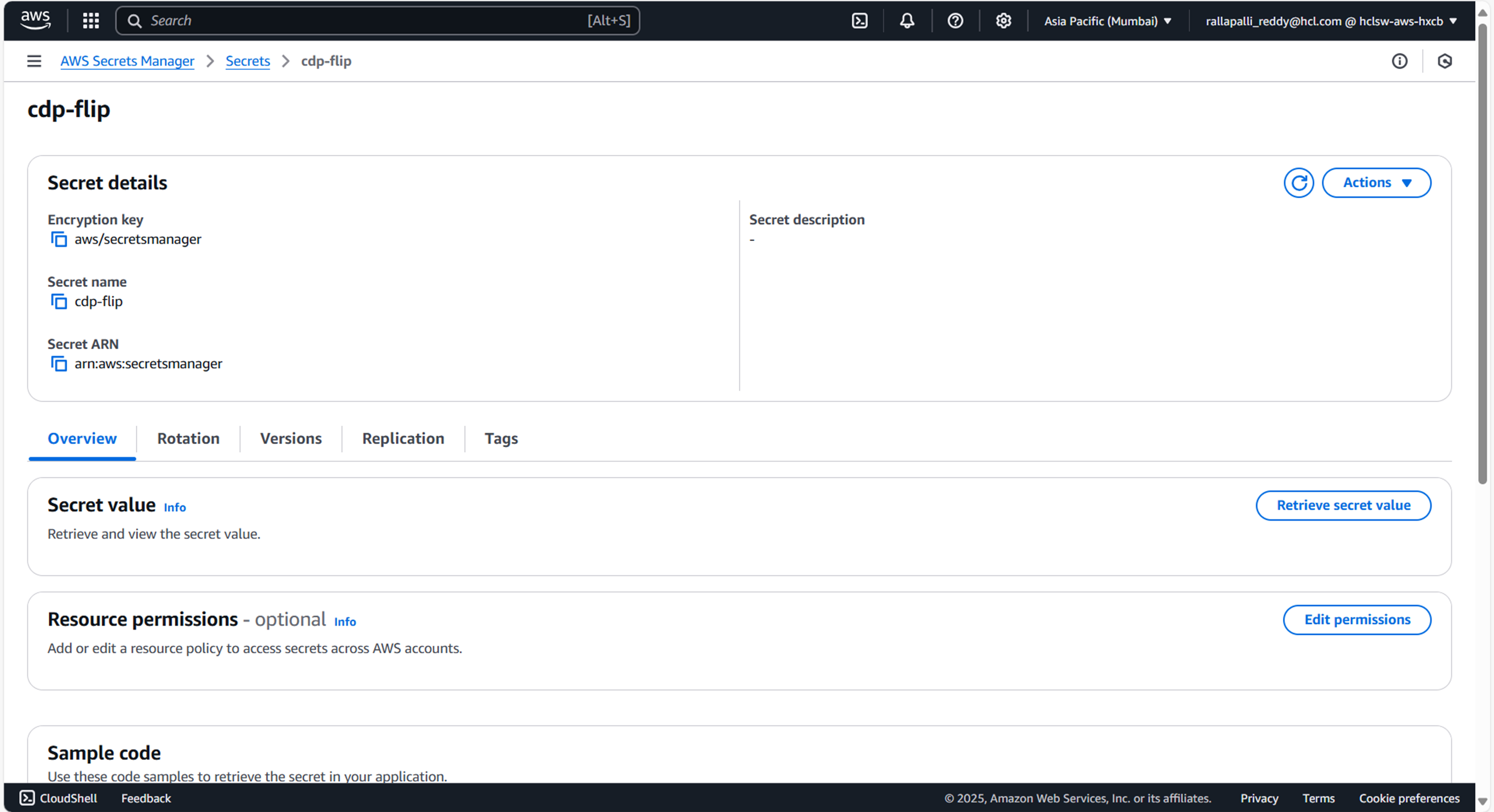

Before deploying CDP Flip, create the cdp-flip secret in AWS Secrets Manager.

Complete the following prerequisite steps:

- Create the cdp-flip secret with the sample keys shown below, and replace the empty values with the actual values.

      {
        "CUSTOMIZATION_AERO_CLUSTER": "",
        "DB_USERNAME": "",
        "DB_PASSWORD": "",
        "DB_URL": "",
        "KafkaConsumerConfig": "",
        "KAFKA_CONSUMER_PREFIX": "flip",
        "KAFKA_TOPIC_LIST": "dmp_rt_segments"
      }

- Create a separate service account, cdp-dev-app-sa, with the appropriate roles and permissions, and update the ConfigMap data with the actual values.

- Create required Kafka topics before deployment.
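
The secret step above can be checked before anything is uploaded. The following is a minimal sketch, not part of the product: it assumes every key in the sample secret must be filled in, and it assumes the secret is created with boto3 under the name cdp-flip. The function names and the `region_name` parameter are hypothetical.

```python
import json

# Keys copied from the sample cdp-flip secret above.
REQUIRED_KEYS = [
    "CUSTOMIZATION_AERO_CLUSTER",
    "DB_USERNAME",
    "DB_PASSWORD",
    "DB_URL",
    "KafkaConsumerConfig",
    "KAFKA_CONSUMER_PREFIX",
    "KAFKA_TOPIC_LIST",
]


def build_secret_string(values):
    """Return the secret as a JSON string.

    Raises ValueError if a key is missing or blank. Treating every key
    as mandatory is an assumption of this sketch.
    """
    missing = []
    for key in REQUIRED_KEYS:
        value = values.get(key)
        if value is None or not str(value).strip():
            missing.append(key)
    if missing:
        raise ValueError("missing or empty secret keys: " + ", ".join(missing))
    return json.dumps({key: values[key] for key in REQUIRED_KEYS})


def create_cdp_flip_secret(values, region_name):
    """Create the secret in AWS Secrets Manager.

    Requires boto3 and valid AWS credentials; boto3 is imported lazily so
    that the validation above can run without it.
    """
    import boto3

    client = boto3.client("secretsmanager", region_name=region_name)
    return client.create_secret(
        Name="cdp-flip",
        SecretString=build_secret_string(values),
    )
```

Calling `build_secret_string` on its own is a cheap way to catch a forgotten value; `create_cdp_flip_secret` is only needed if the secret is created from a script rather than from the AWS console.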

Deploy CDP Flip

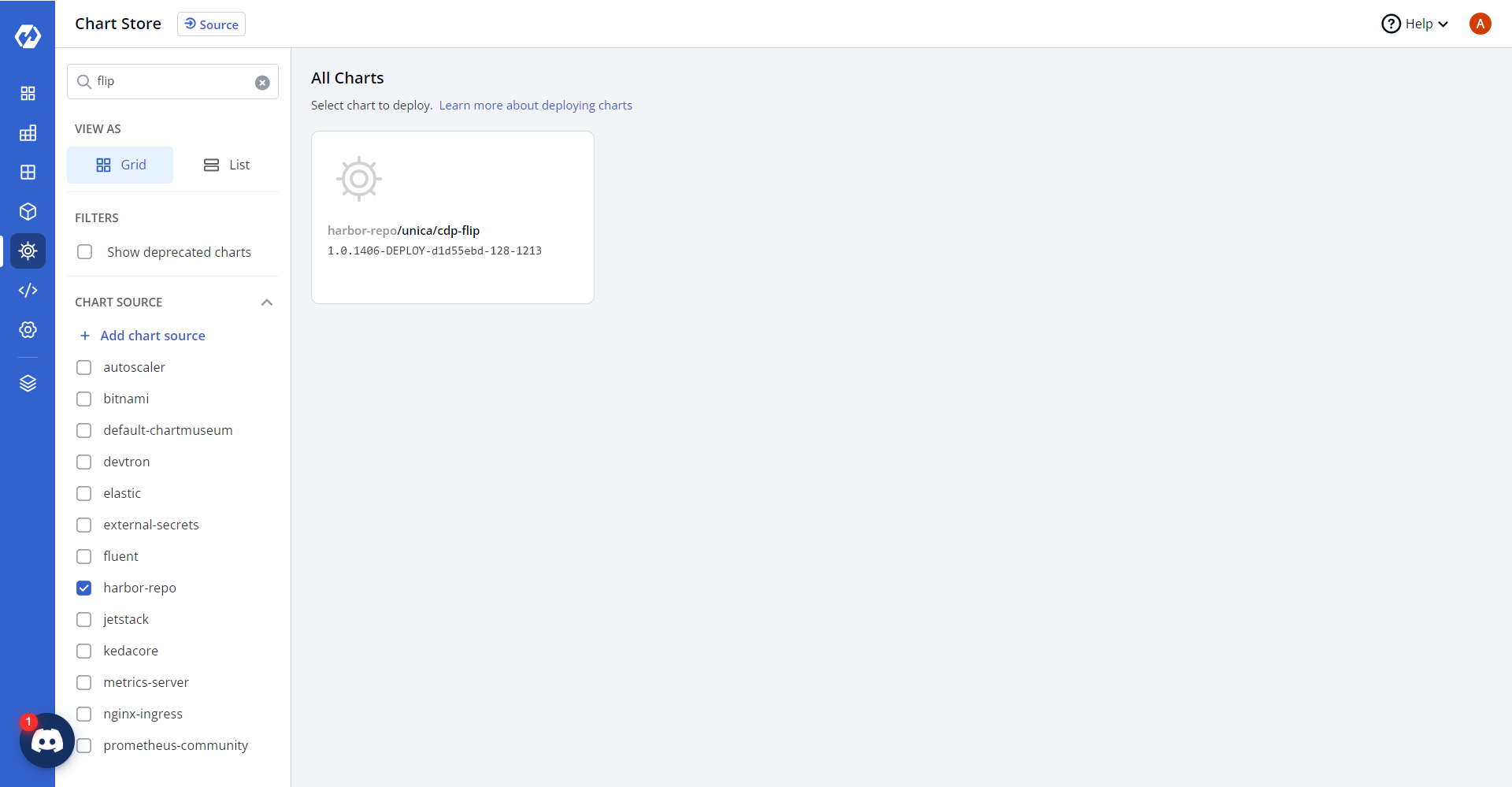

- Navigate to the Devtron Chart Store and select the cdp-flip chart to deploy.

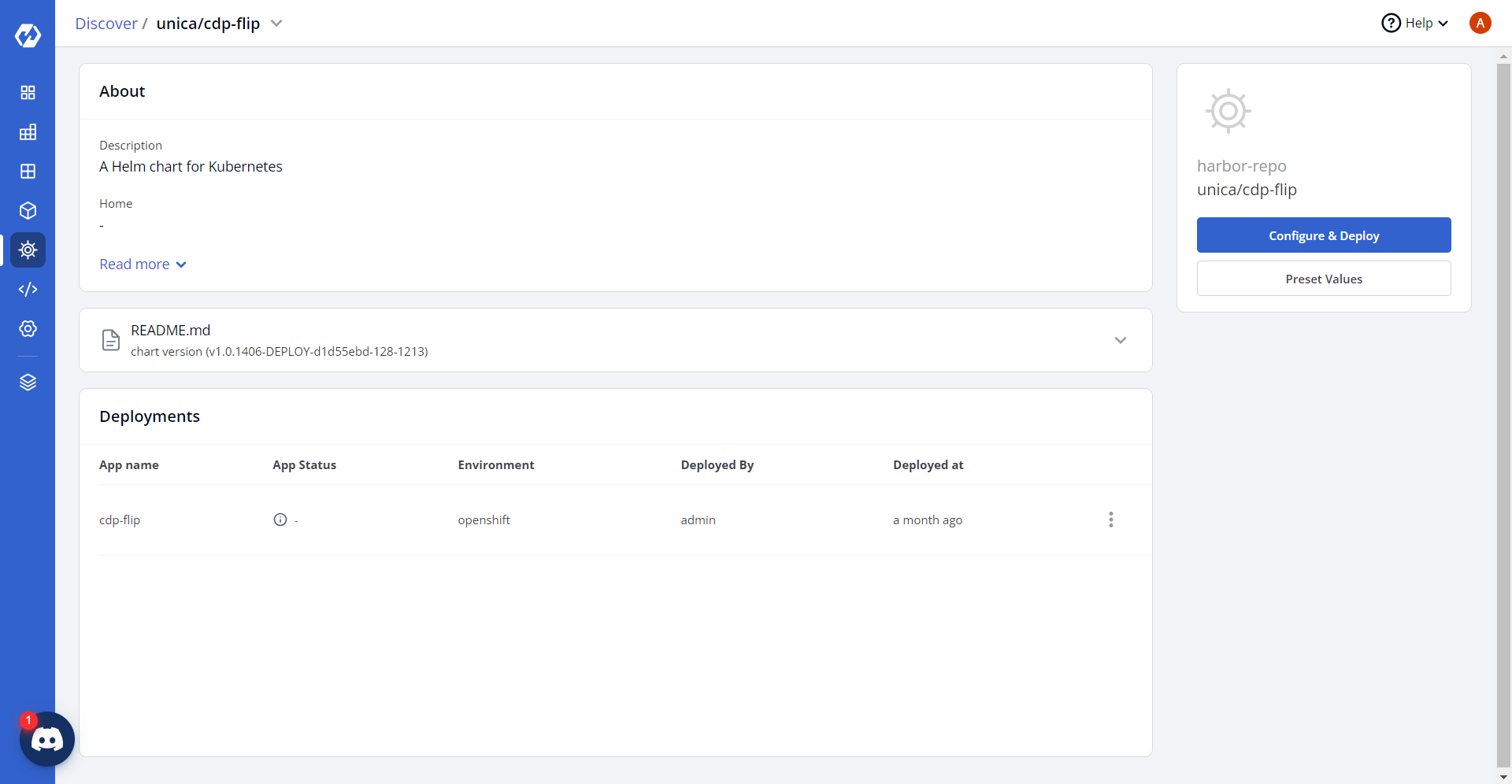

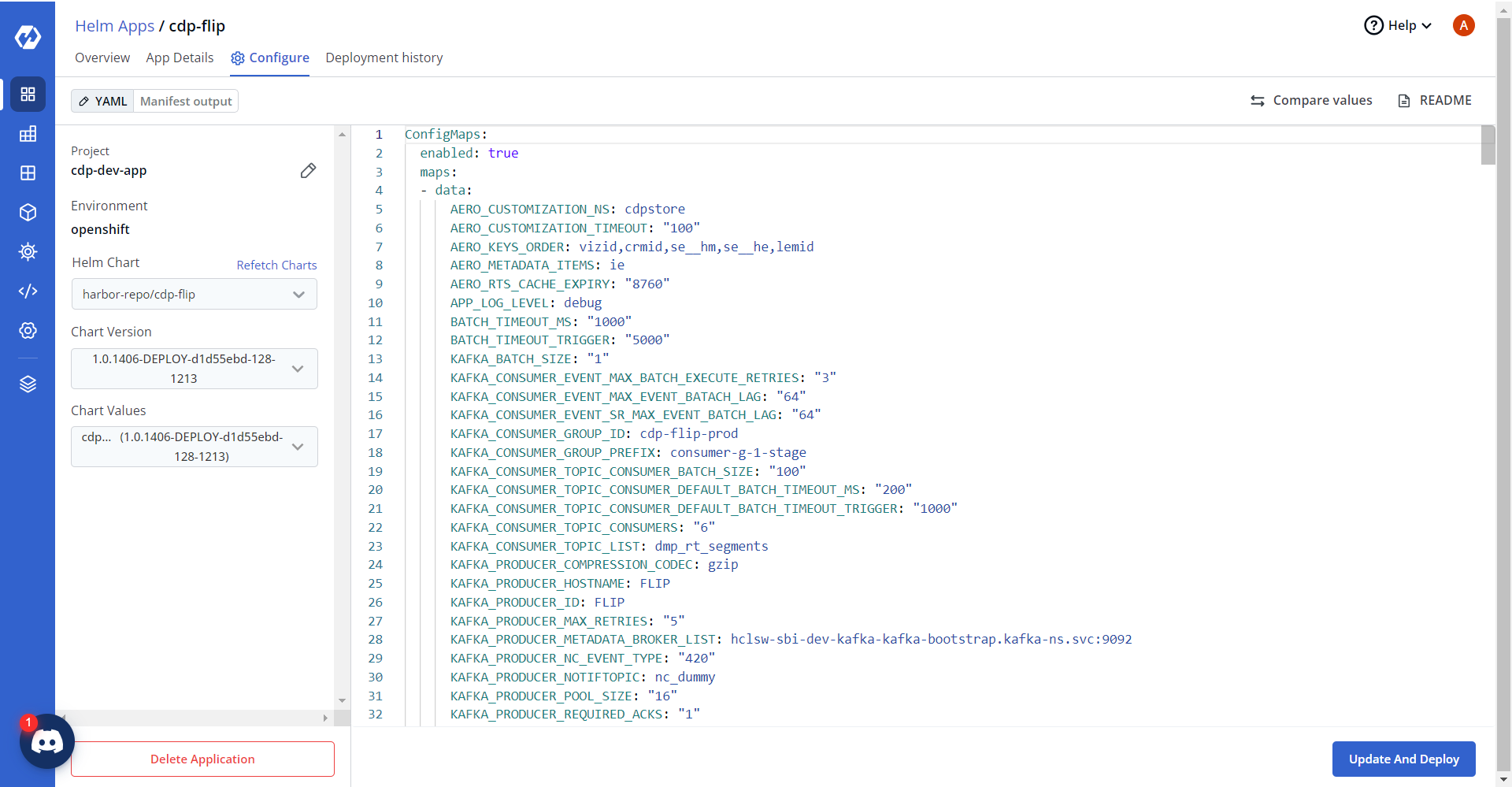

- Configure and deploy the cdp-flip chart.

- In the YAML section, update the ConfigMap with the following values, and then deploy the chart.

      AERO_CUSTOMIZATION_NS: cdpstore
      AERO_CUSTOMIZATION_TIMEOUT: "100"
      AERO_RTS_CACHE_EXPIRY: "8760"
      MYSQL_DBDRIVER: org.mariadb.jdbc.Driver
      MYSQL_REFRESH_DBREFRESHTIMEMIN: "30"
      MT_PROPERTIES_FLIP_EXECUTOR_SERVICE_MIN_THREADS: "64"
      MT_PROPERTIES_FLIP_EXECUTOR_SERVICE_MAX_THREADS: "64"
      MT_PROPERTIES_FLIP_EXECUTOR_SERVICE_THREAD_TTL: "5"
      MT_PROPERTIES_FLIP_EXECUTOR_SERVICE_QUEUSE_SIZE: "10000000"
      KAFKA_CONSUMER_GROUP_ID: cdp-flip-prod
      KAFKA_CONSUMER_GROUP_PREFIX: consumer-g-1-stage
      KAFKA_REBALANCE_MAX_RETRIES: "60"
      KAFKA_CONSUMER_TOPIC_LIST: dmp_rt_segments
      KAFKA_CONSUMER_TOPIC_CONSUMERS: "6"
      KAFKA_CONSUMER_TOPIC_CONSUMER_BATCH_SIZE: "100"
      KAFKA_CONSUMER_TOPIC_CONSUMER_DEFAULT_BATCH_TIMEOUT_MS: "200"
      KAFKA_CONSUMER_TOPIC_CONSUMER_DEFAULT_BATCH_TIMEOUT_TRIGGER: "1000"
      KAFKA_CONSUMER_EVENT_MAX_EVENT_BATACH_LAG: "64"
      KAFKA_CONSUMER_EVENT_SR_MAX_EVENT_BATCH_LAG: "64"
      KAFKA_CONSUMER_EVENT_MAX_BATCH_EXECUTE_RETRIES: "3"
      KAFKA_PRODUCER_METADATA_BROKER_LIST: <kafka brokers>
      KAFKA_PRODUCER_SERIALIZER_CLASS: kafka.serializer.StringEncoder
      KAFKA_PRODUCER_ID: FLIP
      KAFKA_PRODUCER_REQUIRED_ACKS: "1"
      KAFKA_PRODUCER_REQUIRED_TIMEOUT: "10000"
      KAFKA_PRODUCER_TYPE: async
      KAFKA_PRODUCER_COMPRESSION_CODEC: gzip
      KAFKA_PRODUCER_MAX_RETRIES: "5"
      KAFKA_PRODUCER_RETRY_BACKOFF: "1000"
      KAFKA_PRODUCER_NC_EVENT_TYPE: "420"
      KAFKA_PRODUCER_HOSTNAME: FLIP
      KAFKA_PRODUCER_POOL_SIZE: "16"
      KAFKA_PRODUCER_NOTIFTOPIC: nc_dummy
      LOG_TSDB_METRIC_LOGGING_FREQUENCY_MS: "60000"
      LOG_TSDB_MAX_WAIT_FOR_CONNECTION_REESTABLISH: "5"
      LOG_TSDB_SERVER_ADDRESS: hxcb-cdp-opentsdb-service.cdp-app.svc.cluster.local
      LOG_TSDB_SERVER_PORT: "80"
      LOG_TSDB_HOSTNAME: hxcb-cdp-opentsdb-service.cdp-app.svc.cluster.local
      LOG_GANGLIA_GMOND_COLLECTORS: ""
      LOG_GANGLIA_GMOND_PORT: "8650"
      LOG_GANGLIA_HOSTNAME_OVERRIDE: cdpstore
      LOG_GANGLIA_METRIC_PREFIX: dmpflip
      AERO_KEYS_ORDER: vizid,crmid,se__hm,se__he,lemid
      AERO_METADATA_ITEMS: ie
      APP_LOG_LEVEL: debug
      KAFKA_BATCH_SIZE: "1"
      MAX_EVENT_BATCH_LAG: "32"
      BATCH_TIMEOUT_MS: "1000"
      BATCH_TIMEOUT_TRIGGER: "5000"
      KAFKA_TOPIC_CONSUMERS: "6"
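
Kubernetes expects ConfigMap data values to be strings, which is why the numeric settings above are quoted. As a rough pre-deployment check, the sketch below flags values that are not strings and angle-bracket placeholders that were never replaced. The function name and the sample dictionary are hypothetical, and the sketch assumes the ConfigMap data has already been loaded into a Python dictionary.

```python
import re

# Matches a value that is nothing but an angle-bracket placeholder.
PLACEHOLDER = re.compile(r"^<[^<>]*>$")


def find_configmap_problems(data):
    """Return a list of human-readable problems found in ConfigMap data."""
    problems = []
    for key, value in data.items():
        if not isinstance(value, str):
            # An unquoted number or boolean in YAML is loaded as a non-string.
            problems.append(f"{key}: value {value!r} is not a string; quote it in the YAML")
        elif PLACEHOLDER.match(value.strip()):
            problems.append(f"{key}: placeholder {value} has not been replaced")
    return problems


# Hypothetical excerpt with two deliberate mistakes.
sample = {
    "KAFKA_CONSUMER_TOPIC_CONSUMERS": "6",
    "KAFKA_PRODUCER_REQUIRED_ACKS": 1,  # unquoted number
    "KAFKA_PRODUCER_METADATA_BROKER_LIST": "<kafka brokers>",  # placeholder left in
}

for problem in find_configmap_problems(sample):
    print(problem)
```

An empty string, such as the value of LOG_GANGLIA_GMOND_COLLECTORS, is deliberately not flagged, because the sample configuration itself leaves that value empty.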

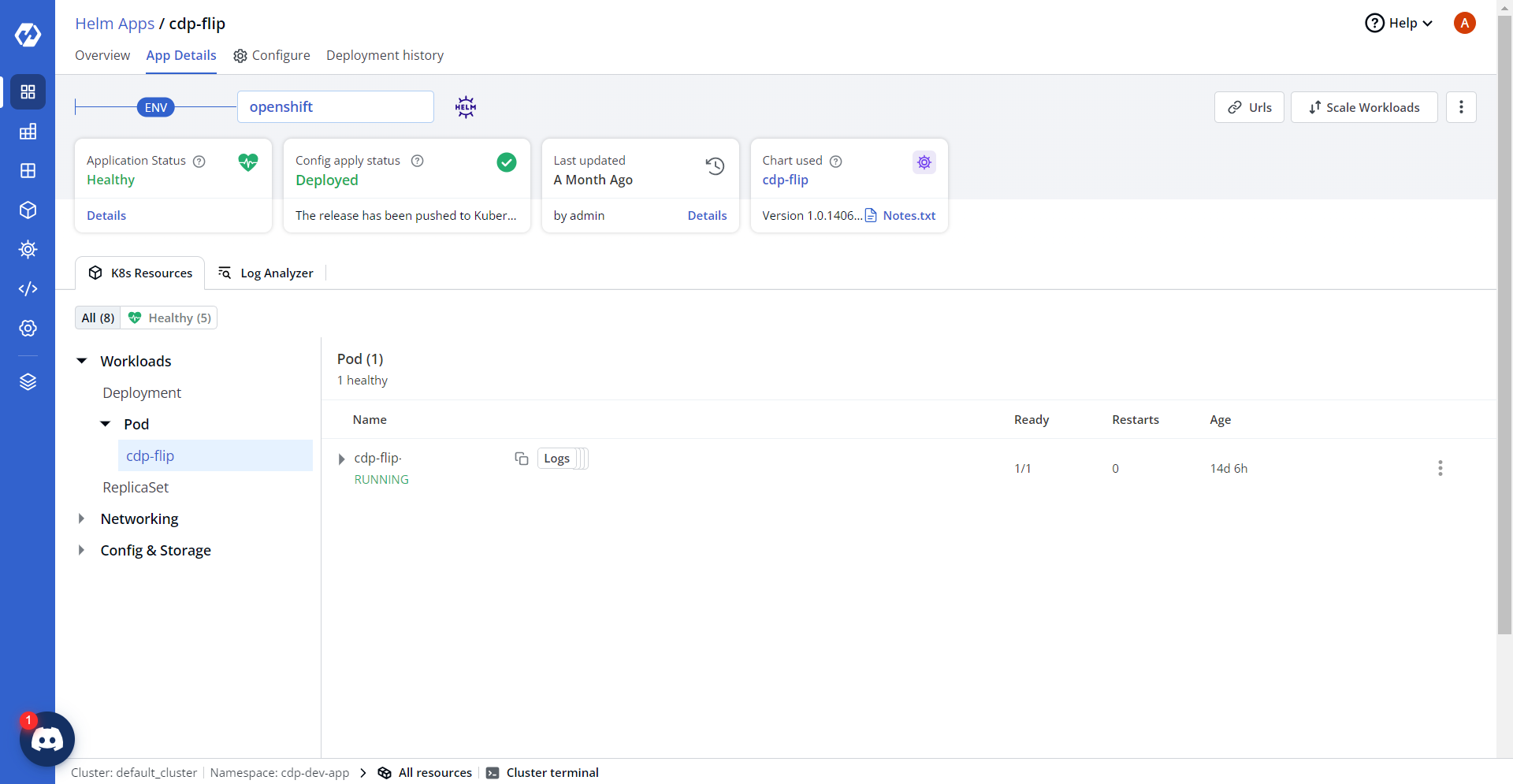

- After the deployment succeeds, validate it as shown below.