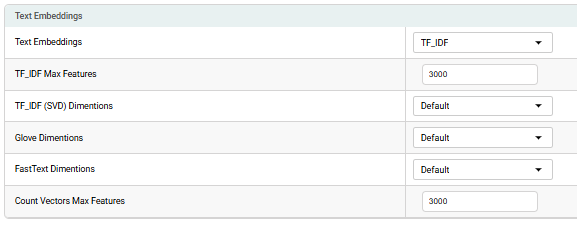

Text Embeddings

Generate dense vector representations of text that capture semantic meaning, so that words or passages with similar meanings map to nearby vectors. Common techniques include:

- Word2Vec & GloVe: Learn static word vectors from co-occurrence patterns in large corpora, so each word gets a single vector regardless of context.

- FastText: Enhances word representations by incorporating subword information, improving handling of rare and morphologically complex words.

- Transformer-based models (BERT, GPT): Produce contextual embeddings, so the same word gets a different vector depending on the surrounding text, which supports advanced NLP tasks.
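
Two ideas from the list above can be sketched in plain Python: comparing dense vectors by cosine similarity, and the character n-grams that FastText-style models use as subword units. This is a minimal illustration, not any library's implementation. The three-dimensional vectors are made-up values rather than output from a trained model, and the helper names `cosine_similarity` and `char_ngrams` are hypothetical. Real FastText also learns a vector for each n-gram and hashes n-grams into buckets, which this sketch leaves out.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of a word wrapped in '<' and '>' boundary markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams


# Toy embeddings: illustrative numbers only, not learned from data.
toy_vectors = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

sim_royal = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
sim_fruit = cosine_similarity(toy_vectors["king"], toy_vectors["apple"])
print(f"king vs queen: {sim_royal:.3f}")
print(f"king vs apple: {sim_fruit:.3f}")

# Trigrams only, to keep the output short.
print(char_ngrams("where", 3, 3))  # ['<wh', 'whe', 'her', 'ere', 're>']
```

With these toy values, "king" scores closer to "queen" than to "apple". Because a rare or unseen word still shares n-grams such as `her` or `ere` with known words, a subword model can assemble a vector for it from those pieces.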