RAG for the Domino IQ server

Retrieval-augmented generation (RAG) is a technique for improving the accuracy and reliability of generative AI models by supplying them with information retrieved from relevant data sources, such as databases. It helps keep LLM responses grounded, current, and domain-specific.

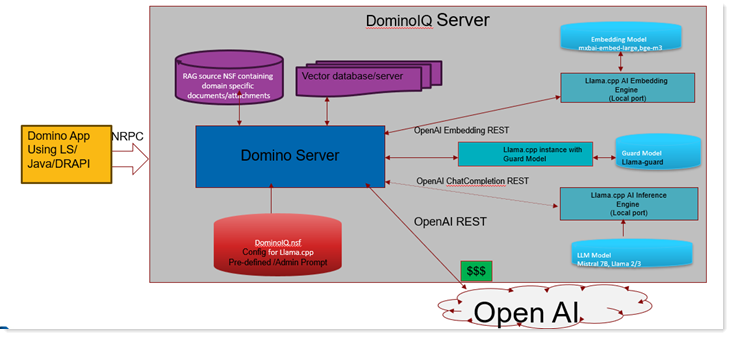

Starting in Domino 14.5.1, RAG support in the Domino IQ server enables a command defined in dominoiq.nsf to use a Domino database as a RAG source database. The specified fields in the RAG source database are converted into embeddings and stored in a local vector database. When an application invokes a configured AI command on the Domino IQ server via the LLMReq method in LotusScript or Java, the user prompt is semantically searched against the vector database. The content for the returned matches is retrieved from the documents in the RAG source database and added as context to the prompt sent to the LLM inference engine.
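The flow described above (embed the source fields, search the embeddings with the user prompt, add the matches to the prompt) can be illustrated with a minimal conceptual sketch. This is not the Domino IQ implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory list stands in for the vector database, and all function names, document IDs, and the prompt layout are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(documents):
    """Embed each source document; the list stands in for a vector database."""
    return [(doc_id, embed(text)) for doc_id, text in documents.items()]

def retrieve(index, prompt, k=1):
    """Return the IDs of the k documents most similar to the prompt."""
    query = embed(prompt)
    ranked = sorted(index, key=lambda entry: cosine(query, entry[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]

def augment(prompt, documents, doc_ids):
    """Prepend the matched source text to the user prompt as context."""
    context = "\n".join(documents[doc_id] for doc_id in doc_ids)
    return f"Context:\n{context}\n\nQuestion:\n{prompt}"

# Hypothetical RAG source content, keyed by a made-up document ID.
documents = {
    "doc1": "vacation requests must be approved by a manager",
    "doc2": "expense reports are due at the end of each month",
}

index = build_index(documents)
user_prompt = "when are expense reports due"
matches = retrieve(index, user_prompt, k=1)
final_prompt = augment(user_prompt, documents, matches)
print(final_prompt)
```

In a real deployment, the embedding step is handled by an embedding model and the search by a vector database server; the sketch only shows how the pieces relate to one another.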

In Domino 14.5.1, RAG support is available only when the Domino IQ server is configured in Local mode, so the LLM that handles RAG source content runs on the same Domino IQ servers that are configured for RAG.

A Domino IQ server configured for RAG runs the following processes:

- A llama-server instance with an LLM inference model, if the Domino IQ server is enabled for Local mode

- A llama-server instance with an embedding model

- A llama-server instance with a guard model (optional)

- A vector database server

All of these processes are launched by the DominoIQ task in the Domino server process.

A database that will be used as a RAG source in a command document must be replicated to the Domino IQ server or servers for which the command is configured in dominoiq.nsf.

Prerequisite

Make sure that dominoiq.nsf on the Domino IQ Administration server and on all Domino IQ servers in the domain has been updated with the dominoiq.ntf template shipped with Domino 14.5.1.

Set up RAG

To configure RAG and enable a RAG database for Domino IQ, see the procedures that follow.