Creating an Embedding and Vector Database Configuration

You can either add a new configuration in dominoiq.nsf or edit an existing configuration document for a given Domino IQ server.

Procedure

- Using the Domino Administrator client, open the Domino IQ database, dominoiq.nsf, on the Domino IQ Administration server.

- Select the Configurations view and click the Add Configuration button, or select an existing configuration document and click the Edit Configuration button.

-

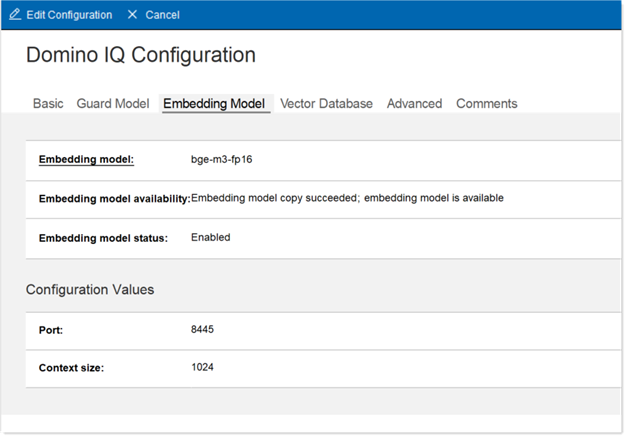

Click the Embedding Model tab.

- In the Embedding Model field, select an available embedding model.

- Set the embedding model status to Enabled.

- To change the default port, set the port on which the llama-server for the embedding model runs.

- If the chosen embedding model supports a context size higher than 1024, change the context size for the embedding model.

-

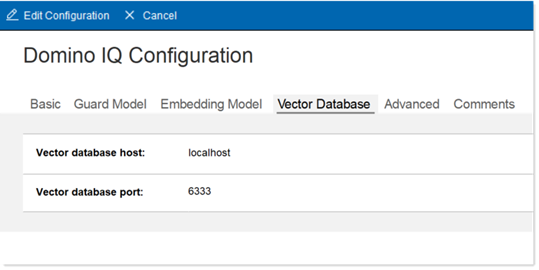

Click on the Vector Database tab.

- Optionally, change the port for the vector database server. If you are running multiple partitions, you can also change the host.

- Click the Advanced tab.

-

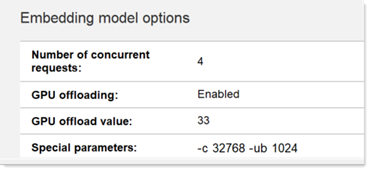

Scroll down to the Embedding model options section and

tune the number of concurrent requests, GPU offloading parameters, and any

special parameters needed to run the embedding model.

- RAG-based commands increase the size of the prompts sent to the LLM. It is recommended that you increase the context size under LLM options on the Advanced tab by using the Special parameters field. For example, specifying "-c 65000" with 10 concurrent requests in the LLM options section gives the AI inferencing engine a token context size of 6500 per request.

- Save the Configuration document.
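
The context-size arithmetic in the RAG step can be checked with a short sketch. It assumes, as the "-c 65000" example implies, that the total context is divided evenly across the concurrent requests. The function names are hypothetical helpers for illustration and are not part of Domino IQ.

```python
def per_request_context(total_context: int, concurrent_requests: int) -> int:
    """Tokens available to each request, assuming an even split of the total context."""
    if concurrent_requests < 1:
        raise ValueError("concurrent_requests must be at least 1")
    return total_context // concurrent_requests


def total_context_for(per_request_tokens: int, concurrent_requests: int) -> int:
    """Total context size (the value given to -c) needed to reach a per-request target."""
    if concurrent_requests < 1:
        raise ValueError("concurrent_requests must be at least 1")
    return per_request_tokens * concurrent_requests


# The example from the procedure: -c 65000 with 10 concurrent requests.
print(per_request_context(65000, 10))  # 6500
# Working backwards from a per-request target to the -c value.
print(total_context_for(6500, 10))     # 65000
```

Working backwards from the per-request size that your RAG prompts need is often the more practical direction: pick the target, multiply by the number of concurrent requests, and enter the result after "-c" in the Special parameters field.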