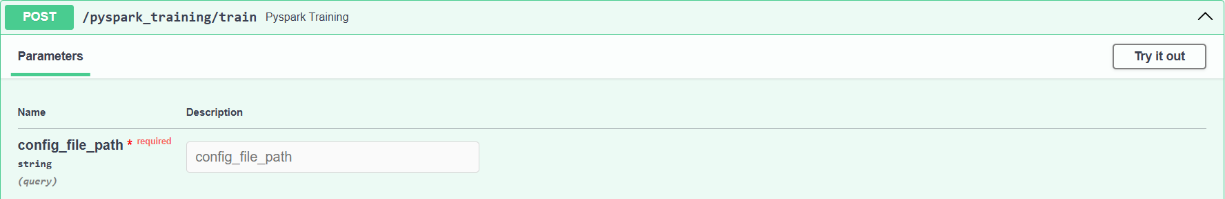

POST: PySpark Training

Endpoint: /pyspark_training/train

Initiates distributed model training with PySpark on Spark/Hadoop clusters. It supports scalable machine learning workflows (classification, regression, and clustering) and is optimized for large datasets (up to ~2 GB), distributing computation across the cluster.

Input Parameters:

- config_file_path (string, required): Absolute path to the configuration file containing the training parameters, the dataset location (e.g., HDFS or ClickHouse), and the algorithm specifications.
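A minimal client-side sketch of calling this endpoint, using only the Python standard library. It assumes the service accepts `config_file_path` in a JSON request body and runs at a hypothetical base URL (`http://localhost:8000`). Neither the transport format nor the host is stated above, so adjust both to your deployment. The helper name `build_train_request` and the example path are illustrative only.

```python
import json
import posixpath
import urllib.request

# Hypothetical base URL; replace with the real host and port of the training service.
BASE_URL = "http://localhost:8000"


def build_train_request(config_file_path: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request to /pyspark_training/train.

    Assumption: the service reads {"config_file_path": "..."} from a JSON body.
    """
    # The parameter must be an absolute path; check it as a POSIX-style path,
    # since the file lives on the (assumed Linux) cluster side, not the client.
    if not posixpath.isabs(config_file_path):
        raise ValueError(f"config_file_path must be absolute, got {config_file_path!r}")

    payload = json.dumps({"config_file_path": config_file_path}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/pyspark_training/train",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical config path, for illustration only.
    req = build_train_request("/opt/configs/train_config.json")
    print(req.get_method(), req.full_url)
    # To actually submit the job (requires a running service):
    # with urllib.request.urlopen(req, timeout=60) as resp:
    #     print(json.load(resp))
```

Building the request separately from sending it keeps the absolute-path check and payload shape easy to verify before any job is submitted to the cluster.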

Output:

Returns a JSON response with the training job status and metadata (e.g., model storage path, training metrics).
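The response schema is not specified beyond "job status and metadata", so the following is only a sketch of how a client might pull those pieces out of the JSON body. The key names `status`, `model_path`, and `metrics` are hypothetical placeholders; substitute the keys the service actually returns.

```python
import json
from typing import Any, Dict


def summarize_training_response(body: str) -> Dict[str, Any]:
    """Extract job status and metadata from the endpoint's JSON response.

    Hypothetical keys: "status", "model_path", "metrics". Missing keys yield
    None (or an empty dict for metrics) instead of raising, because a job that
    is still running may not have a model path or metrics yet.
    """
    data = json.loads(body)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object in the response body")
    return {
        "status": data.get("status"),
        "model_path": data.get("model_path"),
        "metrics": data.get("metrics", {}),
    }


if __name__ == "__main__":
    # Illustrative response body, not real service output.
    example = '{"status": "completed", "model_path": "hdfs:///models/run1", "metrics": {"accuracy": 0.91}}'
    print(summarize_training_response(example))
```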