Prerequisites and limitations

This section provides detailed information about the prerequisites and limitations for BigFix 10 Insights.

System Prerequisites

The system requirements for BigFix 10 Insights are as follows:

- An entitlement to install and use BigFix Insights.

- BigFix

Insights requires

a Windows WebUI Server that is subscribed to the BigFix

Insights content

site.Notes:

- Insights consists of two BigFix content sites. The external content site for delivery of sample reports and the WebUI content site

- The WebUI content site must subscribe to the WebUI server that facilitates the ETL. The external content site must subscribe to a computer to retrieve the tableau sample workbooks. These sites collectively must not subscribe to all computers within the environment

- The Linux WebUI is currently not supported

- For WebUI configuration, please refer to https://help.hcltechsw.com/bigfix/10.0/webui/WebUI/Admin_Guide/WebUI_admin_guide.html

- An SQL replica or offline copy of the BigFix Enterprise

Database Ingestion data source.Notes:

- Do not run an Insights ETL against a live BigFix root server. A replica is required to serve as the datasource for BigFix Insights. The replica can be facilitated by a variety of SQL processes, including backups, T-Log shipping, and SQL Always On.

- Insights ETL supports ingesting only from Windows-based root servers (BigFix 10).

- DB2 databases or root servers are not supported

- LINUX SQL currently not supported

- BigFix Insights requires Microsoft SQL Server 2017, SQL Server 2019, or SQL Server 2022 for the Insights Data Lake. Prior versions are not supported

- Insights Data Lake must be generated from a Windows computer that is running WebUI

- To represent the Tableau reports that come with the product, a Tableau license agreement is required to run and view the provided Insights Tableau workbooks. This license is a separate entitlement from Insights. A minimum of one Tableau Creator license is required. You can use the explorer or view licenses to view workbooks

Database Permissions

There are three types of users:

-

Report Users (PowerBI/Tableau): These users require READ ONLY permission, granting SELECT access to all tables in Insights.Note: In multi-datasource scenarios, the Read Only database user may not access all table data due to the Row-Level Security (RLS) policy. Two methods to bypass the RLS security policy and generate reports across all datasources are using the

dbouser or creating a user group called 'sec_rls_excluded' and adding the user into it. -

Insights Creators (INSIGHTS_CREATOR): Insights creators need the following permissions:

- CREATE DATABASE

- ALTER ANY LOGIN

- ALTER ANY LINKED SERVER

- CONNECT SQL

- VIEW SERVER STATE

- INSERT/UPDATE/DELETE/ALTER tempdb

-

Insights Administrators (INSIGHTS_ADMIN): As administrators who use Insights and run regular ETLs, these users require the following permissions:

- ALTER ANY LOGIN

- ALTER ANY LINKED SERVER

- CREATE SCHEMA

- SELECT/INSERT into dbo

- SELECT/INSERT into webui

- ALTER ANY SECURITY POLICY

- INSERT/UPDATE/DELETE/ALTER tempdb

- VIEW SERVER STATE

- CONNECT SQL

Credential prerequisites

The credential prerequisites for the Insights ETL components follow:

| Required rights | Purpose |

|---|---|

| DB Reader to ingestion sources (REPLICAS) | The DB Reader is used to Authenticate and read information from data sources that are ingested by Insights. |

| DBO to Insights | The Insights account is use to maintain the application schema with an ongoing or persistent ETL and to create the Insights database. |

Network prerequisites

As with all data loading applications, minimize and optimize network latency and bandwidth impacts. Align resources within close network proximity, where or when possible. If possible, co-locate replicas with the Insights Data Lake.

| Required rights | Purpose |

|---|---|

|

Insights ETL Server requires clear line of sight to all ingested SQL Instances or Servers (replicas) over the listening SQL port that support BigFix Enterprise. |

Insights Data Lake DB Server >

Ingestion Source DB: <Ingestion Source DB Port> |

|

The server that is running the WebUI instance requires connection to the Insights ETL Server Database by the 1433 port or the SQL listening port. |

WebUI Server >

Insights

Data Lake

DB Server:<Ingestion Source DB Port> |

Application requirements

DBMS prerequisites| Required rights | Purpose |

|---|---|

| Minimum ingestion BigFix Enterprise version | Insights ingests one or more BFE data sources.

|

| Insights ingestion to live BigFix Enterprise deployments is not supported. |

|

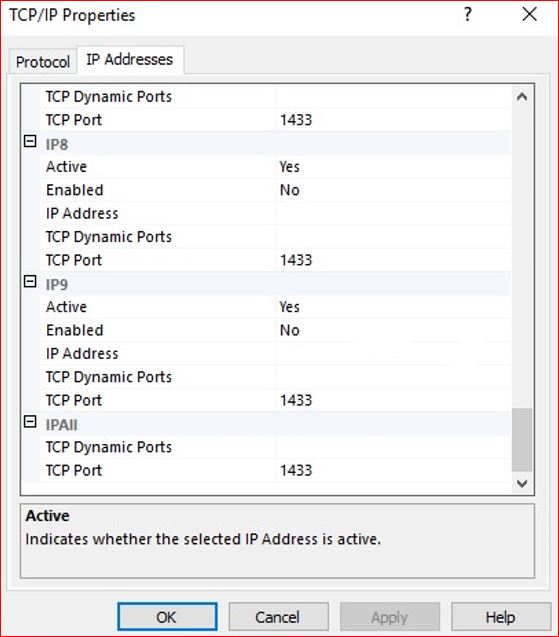

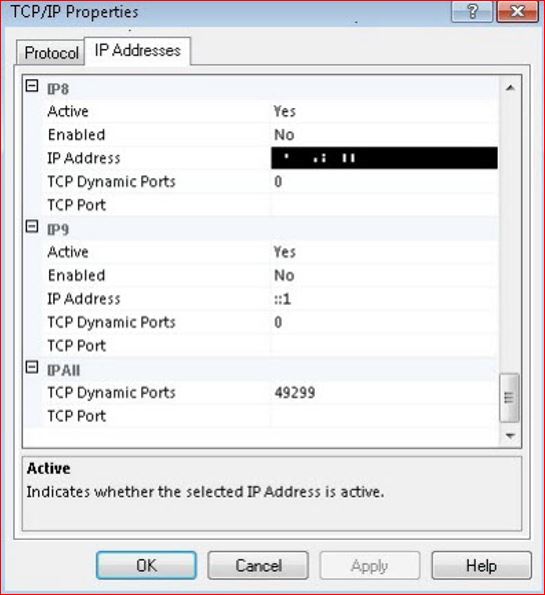

SQL Server Listening Port

By default, SQL Server listens on TCP port number 1433, but for named instances the TCP port is dynamically configured. There are several options available to get the listening port for a SQL Server named instance.

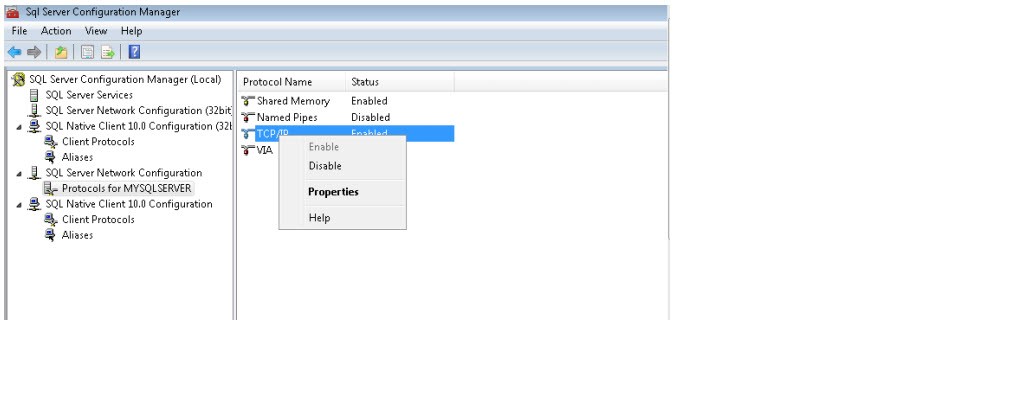

- Click Start > All Programs > Microsoft SQL Server 2008 > Configuration Tools > SQL Server Configuration Manager

- Go to SQL Server Configuration Manager > SQL Server Network Configuration > Protocols for <your Database>

- Right click TCP/IP and select

Properties.

- In the TCP/IP Properties dialog box, go to the IP Addresses tab and scroll

down to the “IPAll”

group.

If the configuration is on a static port, the current port number will be available in the TCP Port text box.

In the above screenshot, the instance listens on port number 1433.If SQL server is configured on a dynamic port, the current port number is available in the TCP Dynamic Ports text box.In the above screenshot, the instance listens on port number 49299.

Compute and memory requirements

The following section is excerpted from the BigFix Capacity Planning guide. Refer to the complete publication: BigFix Performance & Capacity Planning Resources

For best capacity-planning results, base the Insights deployment on a database replica. You have many options for managing the base capacity, such as using database backups, MS SQL replication support, and MS SQL availability groups.

- For an MS SQL replica, additional MS SQL resources are required. See the following table for considerations and note the additional pressure on log space that is required for the replica.

- It is possible to bypass the replica for low-scale and test deployments. However, use care with this approach by using premonitoring and postmonitoring, in particular for the DBMS, to ensure system and database health.

- For input/output operations per second (IOPS), the general DBMS server standard of 5,000 IOPS with less than 1msec latency provides the best results. However, the I/O profile tends to be more read intensive and highly subject to reporting content and workloads. As a result, it might be possible to have good performance with a 2,500-IOPS based storage device. However, this possibility can only be assessed through careful monitoring.

For the Insights server, the capacity planning requirements are for the base BigFix offering.

- The requirements for the base operating system, the MS SQL secondary instance (if applicable), and the customer provided reporting technology (for example, Tableau), and associated data sources must be included.

- For best results, the deployment size is represented in the BigFix Enterprise computers table data cardinality for the initial offering.

The CPU and memory recommendations are based on the Extract-Transform-Load (ETL) agent that ingests the BigFix root server content to build the Insights Data Lake. These requirements are generally low because of the economy of the ETL process.

| Component | Additional CPUs | Additional memory in GB | Log space in GB |

| MS SQL Replication | +2 | +4 | Two times more than the normal space |

| Deployment size | CPU | Memory (GB) | Storage (GB) |

| 10,000 | +4 | +10 | 10% of BFEnterprise |

Workload management and Insights DBMS sizing

Ingestion objects

- Actions

- Sites

- Computers

- Properties

- Property results

- Fixlets (analysis, tasks and fixlets), referred to as "content" in BigFix Insights

- Fixlet results, referred to as "content results" in BigFix Insights

- Groups (Including group membership)

- Operators

- Roles

Global content and linked items

In this release, global object mapping is limited to external content sites, fixlets (and tasks) and analysis. Custom content linking is not within the scope of this release.

In the Insights database, you can import data from one or more BigFix Enterprise Database data sources. This release supports data ingestion from up to 10 data sources, and the total number of endpoints of the ingested data sources must not exceed 250,000.

The concept of global objects amounts to the singular representation of the same object from multiple BigFix Enterprise Database data sources. For example, the BigFix Enterprise Server support site is the same on one data source, many data sources, and every data source.

Sizing concepts

The scalability and size requirements of Insights is directly related to the concepts of data sets with an additional dimension of “rate of change”.

These size of data sets varies for each environment as every environment is unique. For example, the rate of change of an environment with a considerable amount of property-result data impacts the database differently than the one that has a sizeable amount of fixlet data (property results vary in data return, but fixlet data is binary). The varying width of data is substantial between these two data types and impacts the size also. Sizing considerations comes always depend on numerous factors. Because of the dynamic nature of data sources and the number of objects ingested and linked to drives the initial full ETL data set, and the rate of change drives the subsequent sets of changes. Consequently, the approach to sizing focuses primarily on the size of the first full data set. The sets of changes are accommodated as a rate of change (percentage) that is applied as an assumption to the full data set. That assumption drives the projected rate of database growth over subsequent ETL changes. In concept, consider the following categories:

| Name | Description |

|---|---|

| Category 1 ETL (Initial) | Provides the Initial reporting dataset. This dataset has the most impact on the database size over time. |

| Category 2 ETL (point in time and change) | Provides a historical or point-in-time data set. This data set only retrieves the values that have changed since the last ETL. This ETL type is driven by the rate of change in the sourcing data sources. If the rate of change is minimal, the impact of growth is minimal in addition to the time that is required to process an ETL. However, if the rate of change is significant, storage resources and the ETL completion time are affected. |

Some general trends correlate the initial data source size to the resulting Insights database size (after the first ETL). This resulting size can vary. However, the following query provides a rough sizing estimate of the database containers that Insights requires. Run the attached query on the ingesting BigFix Enterprise Database. The returned number is a conservative estimate of what the initial dataset might be after a 20% increase. Ensure that you run the query on all ingesting BigFix Enterprise environments to project the accumulated size of the first ETL.

-- SCRIPT USED to Guestimate the destination size database +- 20% For Full run

Select 1.2* (sum(TotalspaceMB)) as 'Initial Projected DB Container space required (MB)' from

(SELECT

t.NAME AS TableName,

--s.Name AS SchemaName, p.rows,

CAST(ROUND(((SUM(a.total_pages) * 8) / 1024.00), 2) AS NUMERIC(36, 2))

AS TotalSpaceMB,

CAST(ROUND(((SUM(a.used_pages) * 8) / 1024.00), 2) AS NUMERIC(36, 2))

AS UsedSpaceMB,

CAST(ROUND(((SUM(a.total_pages) - SUM(a.used_pages)) * 8) / 1024.00, 2) AS NUMERIC(36, 2)) AS UnusedSpaceMB

FROM

sys.tables t INNER JOIN

sys.indexes i ON t.OBJECT_ID = i.object_id INNER JOIN

sys.partitions p ON i.object_id = p.OBJECT_ID AND i.index_id = p.index_id

INNER JOIN

sys.allocation_units a ON p.partition_id = a.container_id LEFT OUTER JOIN

sys.schemas s ON t.schema_id = s.schema_id WHERE

--t.NAME NOT LIKE 'dt%'

t.is_ms_shipped = 0 AND i.OBJECT_ID > 255

and (

t.name like 'ACTIONRESULTS' OR t.name like 'ACTIONS'

OR t.name like 'ACTIONSTATESTRINGS'

OR t.name like 'COMPUTERS'

OR t.name like 'COMPUTER_GROUPS'

OR t.name like 'COMPUTER_SITES' OR t.name like 'CUSTOM_ANALYSES'

OR t.name like 'CUSTOM_ANALYSIS_PROPERTIES'

OR t.name like 'CUSTOM_FIXLETS'

OR t.name like 'CUSTOM_FIXLET_FIELDS' OR t.name like 'DBINFO'

OR t.name like 'EXTERNAL_ANALYSES'

OR t.name like 'EXTERNAL_ANALYSIS_PROPERTIES'

OR t.name like 'EXTERNAL_FIXLETS'

OR t.name like 'EXTERNAL_FIXLET_FIELDS' OR t.name like 'FIXLETRESULTS'

OR t.name like 'GROUPS'

OR t.name like 'LONGQUESTIONRESULTS'

OR t.name like 'PROPERTIES'

OR t.name like 'QUESTIONRESULTS' OR t.name like 'SITENAMEMAP'

OR t.name like 'SITES'

) GROUP BY

t.Name, s.Name, p.Rows

)

as T1

-- SCRIPT USED to Guestimate the destination size T-Log Container +- 20% For Full run

Select 2.5*(sum(TotalspaceMB)) as 'Initial Projected T-Log Container space required (MB)' from

(SELECT t.NAME AS TableName,

--s.Name AS SchemaName, p.rows,

CAST(ROUND(((SUM(a.total_pages) * 8) / 1024.00), 2) AS NUMERIC(36, 2))

AS TotalSpaceMB,

CAST(ROUND(((SUM(a.used_pages) * 8) / 1024.00), 2) AS NUMERIC(36, 2))

AS UsedSpaceMB,

CAST(ROUND(((SUM(a.total_pages) - SUM(a.used_pages)) * 8) / 1024.00, 2) AS NUMERIC(36, 2)) AS UnusedSpaceMB

FROM sys.tables t INNER JOIN

sys.indexes i ON t.OBJECT_ID = i.object_id INNER JOIN

sys.partitions p ON i.object_id = p.OBJECT_ID AND i.index_id = p.index_id

INNER JOIN

sys.allocation_units a ON p.partition_id = a.container_id LEFT OUTER JOIN

sys.schemas s ON t.schema_id = s.schema_id WHERE

--t.NAME NOT LIKE 'dt%'

t.is_ms_shipped = 0 AND i.OBJECT_ID > 255

and (

t.name like 'ACTIONRESULTS' OR t.name like 'ACTIONS'

OR t.name like 'ACTIONSTATESTRINGS'

OR t.name like 'COMPUTERS'

OR t.name like 'COMPUTER_GROUPS' OR t.name like 'COMPUTER_SITES'

OR t.name like 'CUSTOM_ANALYSES'

OR t.name like 'CUSTOM_ANALYSIS_PROPERTIES'

OR t.name like 'CUSTOM_FIXLETS'

OR t.name like 'CUSTOM_FIXLET_FIELDS' OR t.name like 'DBINFO'

OR t.name like 'EXTERNAL_ANALYSES'

OR t.name like 'EXTERNAL_ANALYSIS_PROPERTIES'

OR t.name like 'EXTERNAL_FIXLETS'

OR t.name like 'EXTERNAL_FIXLET_FIELDS' OR t.name like 'FIXLETRESULTS'

OR t.name like 'GROUPS'

OR t.name like 'LONGQUESTIONRESULTS'

OR t.name like 'PROPERTIES'

OR t.name like 'QUESTIONRESULTS' OR t.name like 'SITENAMEMAP'

OR t.name like 'SITES'

) GROUP BY

t.Name, s.Name, p.Rows

)

as T1

-- SCRIPT USED to Guestimate the TempDB database +- 20% For Full run

Select .5*(sum(TotalspaceMB)) as 'Initial Projected TempDB space required (MB)' from

(SELECT t.NAME AS TableName,

--s.Name AS SchemaName,

p.rows,

CAST(ROUND(((SUM(a.total_pages) * 8) / 1024.00), 2) AS NUMERIC(36, 2))

AS TotalSpaceMB,

CAST(ROUND(((SUM(a.used_pages) * 8) / 1024.00), 2) AS NUMERIC(36, 2))

AS UsedSpaceMB,

CAST(ROUND(((SUM(a.total_pages) - SUM(a.used_pages)) * 8) / 1024.00, 2) AS NUMERIC(36, 2)) AS UnusedSpaceMB

FROM

sys.tables t INNER JOIN

sys.indexes i ON t.OBJECT_ID = i.object_id INNER JOIN

sys.partitions p ON i.object_id = p.OBJECT_ID AND i.index_id = p.index_id

INNER JOIN

sys.allocation_units a ON p.partition_id = a.container_id LEFT OUTER JOIN

sys.schemas s ON t.schema_id = s.schema_id WHERE

--t.NAME NOT LIKE 'dt%'

t.is_ms_shipped = 0 AND i.OBJECT_ID > 255

and (

t.name like 'ACTIONRESULTS' OR t.name like 'ACTIONS'

OR t.name like 'ACTIONSTATESTRINGS'

OR t.name like 'COMPUTERS'

OR t.name like 'COMPUTER_GROUPS' OR t.name like 'COMPUTER_SITES' OR t.name like 'CUSTOM_ANALYSES'

OR t.name like 'CUSTOM_ANALYSIS_PROPERTIES'

OR t.name like 'CUSTOM_FIXLETS'

OR t.name like 'CUSTOM_FIXLET_FIELDS' OR t.name like 'DBINFO'

OR t.name like 'EXTERNAL_ANALYSES'

OR t.name like 'EXTERNAL_ANALYSIS_PROPERTIES'

OR t.name like 'EXTERNAL_FIXLETS'

OR t.name like 'EXTERNAL_FIXLET_FIELDS' OR t.name like 'FIXLETRESULTS'

OR t.name like 'GROUPS'

OR t.name like 'LONGQUESTIONRESULTS'

OR t.name like 'PROPERTIES'

OR t.name like 'QUESTIONRESULTS' OR t.name like 'SITENAMEMAP'

OR t.name like 'SITES'

) GROUP BY

t.Name, s.Name, p.Rows

)

as T1

Scale Implications, Best practices and Optimizations

- Reduce the amount of sites that are ingested (dialog provided per dataset). If you no plans to report on the data history, do not drive the data into Insights.

- After a primary site is added, that data is maintained historically and the data cannot be deleted. Plan the sites you want to import during the initial BigFix Insights setup.

- Plan to run the ETL at a frequency that is useful to business operations. The suggested frequency is once a day.

- Reduce checklist site subscriptions to computers that you intend to evaluate.

System versioned tables

The data warehouse that BigFix Insights creates will leverage SQL Server's system versioned tables in order to keep a historical account of incoming and changing data.

The default retention policy for all temporal tables created by Insights is 36 MONTHS. At present, the only way to modify this is manual. However, it is possible to apply the WebUI setting _WebUIAppEnv_RETENTION_PERIOD to some other supported value (e.g. 100 DAYS). If you apply this setting prior to generating your Insights data lake, then all temporal tables will take this new value instead of the default. If you apply the setting after-the-fact then this will only affect those tables generated thereafter.

[dbo].[device_dimensions_historical]SQL transactions and application behavioral patterns

All transactions use snapshot isolation during importation. From that point, SQL uses a prepared batch statement, and this statement is fed into an upsert. The upsert performs two functions: First is it reads the applicable destination row to see whether an update is required and the second is it inserts or updates the row as required. During importation, the tempdb is heavily used. Data is dumped into the tempdb and then read and processed for updation or insertion.

The end of the importation process, where global objects are computed and stored (warehousing), includes a large read impact (approximately 90%) and small write impact (approximately 10%).

Normal Business Intelligence report running activities generates a large read impact and nearly no write operations.

Considering the previous information, the suggested balance for the Insights DBMS should be optimized around 75 to 25 percent with a slight preference for read based off application usage. For this reason the following specifications are defined.

BigFix Insights SQL configuration guidelines

| Configuration | Description or rationale |

| Make the tempdb I/O channel dedicated and not shared. | Insights leverages the tempdb Heavily, Isolate TempDB when possible from other workloads. |

| Configure the SQL memory limitations correctly. | Ensure that SQL memory is capped to allow at least 8 GB for the operating system. This cap is configured in the SQL Server properties. |

| Configure virus scanners to exclude the SQL file storage location, including all data file sets. | When you configure antivirus software settings, exclude the following files or directories (as applicable) from virus scanning. This exclusion improves the performance of the files and make sure that the files are not locked when the SQL Server service must use them. Refer to instructions link from your virus scanner for more information on how to set this exclusion rule. For information about choosing antivirus software, see How to choose antivirus software to run on computers that are running SQL Server |

| Do not use file indexing or file compression on supporting SQL data files. | Apply the reasoning that is similar to the reasoning behind antivirus file exclusions to HIPS (Host Intrusion Prevention) based applications or file indexing operations that might lock the data files that are in use. |

| Initially, establish the sizes of the transaction log and the MDF file to 80 percent of the size projection, and set auto growth appropriately. | By using the provided sizing information, make sure the MDF and LDF data files are initially established prior to setting up the initial database. With this setup, the system can minimize auto growth during the initial ETL. |

| Configure auto growth of SQL Database files to be substantial versus minimal. | The supporting DB file can become quite large in SQL in support of the Insights database. To minimize the time and resources that the system dedicates to the growing supporting data files, assure the growth characteristics are altered from the default settings for auto-growth to be no less than 2GB or by 10 per every time. In most cases the percentage approach yields the best results. |

| Ensure that Soft-NUMA is in place. | According to Microsoft, SQL 2017 and 2019 do not use Soft-NUMA and manages NUMA configurations by default. Ensure that this setting is altered from the default setting. For details, refer to Soft-NUMA (SQL Server) |

ETL rhythm and scheduling

Managing the replica

BigFix Insights relies on a replica of the BigFix Enterprise Data Source that is co-located with the Insights database. The WebUI Component of Insights is located on a designated WebUI Server, which is likely one of the ingested BigFix Enterprise Servers. The BigFix Insights database can also be co-located on the same WebUI Server. No technical controls prevent you from the putting the database on the WebUI Server. The WebUI Server maintains communication process to the BigFix Insights Database, while all ingested information and audit details are stored on the Insights database. The Insights database might consume more than one BigFix Enterprise Database, and if co-located on the WebUI Server, it might expand the information boundary to other BigFix Enterprise Data Sources on the Insights Database.

You must have backups of existing BigFix Enterprise Databases to reduce potential downtime and improve recovery intervals. You can use automation to periodically backup the BigFix Enterprise Databases from the primary BigFix Server and restore backup sets on another SQL server as a snapshot in time and replica. You can generate a snapshot and replica a number of ways, depending on the available tools and utilities.

The following list describes one backup method that uses common tool sets:

- BigFix Server: - Periodic database backup (BigFix Enterprise and BigFix Enterprise Server Reporting).

- Primary BigFix Server: Periodic scheduled task. Generate Robocopy Database backups and BigFix Enterprise Server backups to standby server.

- BigFix replica server: Periodic database restore (BigFix Enterprise and BigFix Enterprise Server Reporting).

BigFix Server - Periodic Database backup (BFEnterprise)

USE [msdb]

GO

/****** Object: Job [BigFix Database Backups] 13:58:51 ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT SELECT @ReturnCode = 0

/****** Object: JobCategory [Database Maintenance] Script Date: 11/16/2012 13:58:51 ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'Database Maintenance'

AND category_class=1) BEGIN EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB',

@type=N'LOCAL', @name=N'Database Maintenance'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'BigFix Database Backups',

@enabled=1,

@notify_level_eventlog=0, @notify_level_email=0, @notify_level_netsend=0, @notify_level_page=0, @delete_level=0,

@description=N'No description available.', @category_name=N'Database Maintenance',

@owner_login_name=N'BIGFIXVM\Administrator', @job_id = @jobId OUTPUT IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Backup BESReporting] Script Date: 11/16/2012 13:58:52 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Backup BESReporting',

@step_id=1, @cmdexec_success_code=0, @on_success_action=3, @on_success_step_id=0, @on_fail_action=3, @on_fail_step_id=0, @retry_attempts=0, @retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'-----------------------------------------------------------------

-- Backup database to file

declare @backupFileName varchar(100), @backupDirectory varchar(100), @databaseDataFilename varchar(100), @databaseLogFilename varchar(100), @databaseDataFile varchar(100), @databaseLogFile varchar(100), @databaseName varchar(100), @execSql varchar(1000)

-- Set the name of the database to backup

set @databaseName = ''BESReporting''

-- Set the path fo the backup directory on the sql server pc set @backupDirectory =

''C:\Program Files\Microsoft SQL Server\MSSQL10.MSSQLSERVER\MSSQL\Backup\''

-- such as ''c:\temp\''

-- Create the backup file name based on the backup directory, the database name and today''s date

set @backupFileName = @backupDirectory + @databaseName + ''-'' + replace(convert(varchar, getdate(), 110), ''-'', ''.'') + ''.bak''

-- Get the data file and its path

select @databaseDataFile = rtrim([Name]), @databaseDataFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files inner join

master.dbo.sysfilegroups as groups on

files.groupID = groups.groupID where DBID = (

select dbid

from master.dbo.sysdatabases where [Name] = @databaseName

)

-- Get the log file and its path

select @databaseLogFile = rtrim([Name]), @databaseLogFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files where DBID = (

select dbid

from master.dbo.sysdatabases

where [Name] = @databaseName

)

and groupID = 0 set @execSql = ''

backup database ['' + @databaseName + ''] to disk = '''''' + @backupFileName + '''''' with

noformat, noinit, name = '''''' + @databaseName + '' backup'''', skip,

compression''

exec(@execSql)', @database_name=N'master', @flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Backup BFEnterprise] Script Date: 11/16/2012 13:58:52 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Backup BFEnterprise',

@step_id=2, @cmdexec_success_code=0, @on_success_action=1, @on_success_step_id=0, @on_fail_action=2, @on_fail_step_id=0, @retry_attempts=0, @retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'-----------------------------------------------------------------

-- Backup database to file

declare @backupFileName varchar(100), @backupDirectory varchar(100), @databaseDataFilename varchar(100), @databaseLogFilename varchar(100), @databaseDataFile varchar(100), @databaseLogFile varchar(100), @databaseName varchar(100), @execSql varchar(1000)

-- Set the name of the database to backup set @databaseName = ''BFEnterprise''

-- Set the path fo the backup directory on the sql server pc set @backupDirectory =

''C:\Program Files\Microsoft SQL Server\MSSQL10.MSSQLSERVER\MSSQL\Backup\''

-- such as ''c:\temp\''

-- Create the backup file name based on the backup directory, the database name and today''s date

set @backupFileName=@backupDirectory + @databaseName+''-'' + replace(convert(varchar, getdate(), 110), ''-'', ''.'')+''.bak''

-- Get the data file and its path

select @databaseDataFile = rtrim([Name]), @databaseDataFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files inner join

master.dbo.sysfilegroups as groups on

files.groupID = groups.groupID where DBID = (

select dbid

from master.dbo.sysdatabases

where [Name] = @databaseName

)

-- Get the log file and its path

select @databaseLogFile = rtrim([Name]), @databaseLogFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files where DBID = (

select dbid

from master.dbo.sysdatabases where [Name] = @databaseName

)

and

groupID = 0

set @execSql = ''

backup database ['' + @databaseName + ''] to disk = '''''' + @backupFileName + '''''' with

noformat, noinit,

name = '''''' + @databaseName + '' backup'''', skip,

compression''

exec(@execSql)', @database_name=N'master', @flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id

= 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'Backup Schedule',

@enabled=1, @freq_type=4, @freq_interval=1, @freq_subday_type=1, @freq_subday_interval=0,

@freq_relative_interval=0, @freq_recurrence_factor=0, @active_start_date=20121113, @active_end_date=99991231, @active_start_time=20000, @active_end_time=235959,

@schedule_uid=N'16b08a53-4276-4cf4-8d40-d33ff7794db5'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name

= N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback COMMIT TRANSACTION

GOTO EndSave QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO

Primary BigFix Server – Periodic Scheduled Task: Robocopy Database and BES Server backups to Standby Server

forfiles -p "C:\Program Files\Microsoft SQL Server\MSSQL10.MSSQLSERVER

\MSSQL\Backup" -m *.* -d -7 -c "cmd /C echo @path" >> C:\maintenance\deleted.txt

forfiles -p "C:\Program Files\Microsoft SQL Server\MSSQL10.MSSQLSERVER

\MSSQL\Backup"

-m *.* -d -7 -c "cmd /c del /q @path"

robocopy "C:\Program Files\Microsoft SQL Server\MSSQL10.MSSQLSERVER\MSSQL

\Backup"

"\\Replica\c$\dbbackups" /s /z /np /w:1 /r:1 /mir /log:C:\Maintenance

\robocopylog.txt /TEE /maxage:3

BigFix replica Server – Periodic Database restore (BFEnterprise)

USE [msdb]

GO

/****** Object: Job [Restore BigFix Databases] Script Date: 11/16/2012 14:07:56 ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT SELECT @ReturnCode = 0

/****** Object: JobCategory [Database Maintenance] Script Date:

11/16/2012 14:08:32 ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'Database Maintenance' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'Database Maintenance'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'Restore BigFix Databases',

@enabled=1, @notify_level_eventlog=0, @notify_level_email=0, @notify_level_netsend=0, @notify_level_page=0, @delete_level=0,

@description=N'No description available.', @category_name=N'Database Maintenance', @owner_login_name=N'DSSSUA\Administrator', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Restore BESReporting] Script Date: 11/16/2012 14:10:28 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Restore BESReporting',

@step_id=1, @cmdexec_success_code=0, @on_success_action=3, @on_success_step_id=0, @on_fail_action=3, @on_fail_step_id=0, @retry_attempts=0, @retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'-----------------------------------------------------------------

-- Restore database from file

use master go

declare @backupFileName varchar(100), @restoreDirectory varchar(100), @databaseDataFilename varchar(100), @databaseLogFilename varchar(100),

@databaseDataFile varchar(100), @databaseLogFile varchar(100), @databaseName varchar(100), @execSql nvarchar(1000)

-- Set the name of the database to restore set @databaseName = ''BESReporting''

-- Set the path to the directory containing the database backup set @restoreDirectory = ''C:\DBBackups\'' -- such as ''c:\temp\''

-- Create the backup file name based on the restore directory, the database name and today''s date

set @backupFileName=@restoreDirectory+@databaseName + ''-'' + replace(convert(varchar, getdate(), 110),''-'',''.'')+ ''.bak''

-- Get the data file and its path

select @databaseDataFile = rtrim([Name]), @databaseDataFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files inner join

master.dbo.sysfilegroups as groups on

files.groupID = groups.groupID where DBID = (

select dbid

from master.dbo.sysdatabases where [Name] = @databaseName

)

-- Get the log file and its path

select @databaseLogFile = rtrim([Name]), @databaseLogFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files where DBID = (

select dbid

from master.dbo.sysdatabases where [Name] = @databaseName

)

and

groupID = 0

-- Create the sql to kill any active database connections set @execSql = ''''

select @execSql = @execSql + ''kill '' + convert(char(10), spid) + '' '' from master.dbo.sysprocesses

where db_name(dbid) = @databaseName and

DBID <> 0

and

spid <> @@spid exec (@execSql)

set @execSql = ''

restore database ['' + @databaseName + ''] from disk = '''''' + @backupFileName + '''''' with

file = 1,

move '''''' + @databaseDataFile + '''''' to '' + '''''''' + @databaseDataFilename + '''''',

move '''''' + @databaseLogFile + '''''' to '' + '''''''' + @databaseLogFilename + '''''',

replace''

exec sp_executesql @execSql', @database_name=N'master', @flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Restore BFEnterprise] Script Date: 11/16/2012 14:10:34 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Restore BFEnterprise',

@step_id=2, @cmdexec_success_code=0, @on_success_action=1, @on_success_step_id=0, @on_fail_action=2, @on_fail_step_id=0, @retry_attempts=0, @retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'-----------------------------------------------------------------

-- Restore database from file

use master go

declare @backupFileName varchar(100), @restoreDirectory varchar(100), @databaseDataFilename varchar(100), @databaseLogFilename varchar(100), @databaseDataFile varchar(100), @databaseLogFile varchar(100), @databaseName varchar(100), @execSql nvarchar(1000)

-- Set the name of the database to restore set @databaseName = ''BFEnterprise''

-- Set the path to the directory containing the database backup set @restoreDirectory = ''C:\DBBackups\'' -- such as ''c:\temp\''

-- Create the backup file name based on the restore directory, the database name and today''s date

set @backupFileName = @restoreDirectory + @databaseName + ''-'' + replace(convert(varchar, getdate(), 110), ''-'', ''.'') + ''.bak''

-- Get the data file and its path

select @databaseDataFile = rtrim([Name]), @databaseDataFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files inner join

master.dbo.sysfilegroups as groups on

files.groupID = groups.groupID where DBID = (

select dbid

from master.dbo.sysdatabases where [Name] = @databaseName

)

-- Get the log file and its path

select @databaseLogFile = rtrim([Name]), @databaseLogFilename = rtrim([Filename])

from master.dbo.sysaltfiles as files where DBID = (

select dbid

from master.dbo.sysdatabases where [Name] = @databaseName

)

and

groupID = 0

-- Create the sql to kill any active database connections

set @execSql = ''''

select @execSql = @execSql + ''kill '' + convert(char(10), spid) + '' '' from master.dbo.sysprocesses

where db_name(dbid) = @databaseName and

DBID <> 0

and

spid <> @@spid exec (@execSql)

set @execSql = ''

restore database ['' + @databaseName + ''] from disk = '''''' + @backupFileName + '''''' with

file = 1,

move '''''' + @databaseDataFile + '''''' to '' + '''''''' + @databaseDataFilename + '''''',

move '''''' + @databaseLogFile + '''''' to '' + '''''''' + @databaseLogFilename + '''''',

replace''

exec sp_executesql @execSql', @database_name=N'master', @flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id

= 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId,

@name=N'Restore Schedule', @enabled=1,

@freq_type=4,

@freq_interval=1, @freq_subday_type=1, @freq_subday_interval=0, @freq_relative_interval=0, @freq_recurrence_factor=0, @active_start_date=20121113, @active_end_date=99991231, @active_start_time=50000, @active_end_time=235959,

@schedule_uid=N'1fd12e1c-1a21-49d3-b57a-e8195c980b29'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name

= N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback COMMIT TRANSACTION

GOTO EndSave QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO

Appending SQL and SAN

SAN considerations

You have to consider several factors when you install and configure an SQL Server to a SAN based environment. You must be familiar with both SQL and SAN.

Make sure that you familiarize yourself with the fundamentals of SQL Servers, when you install a SAN. Whether or not a SAN is being used, it is important to have a good knowledge of the hardware that SQL files are being stored on. The configuration values must not conflict with the fundamentals.

- All SAN hardware must comply with SQL Server I/O basics. The write ordering

needs must be maintained, which means that writes must always be secured in

or on stable media and you must take precautions to prevent torn I/O write

actions. The write ordering process must be available from the selected

manufacturer or the manufacturer's SAN documentation.

- Stable media refers to storage that can survive system restart or common failure and is physical disk storage, but SAN caches also fall under this definition. Stable media is an SQL Server requirement, and the server relies on stable media for Write Ahead Logging to maintain the ACID properties of the database and to ensure data integrity. Stable media strategies must be supported by the hardware manufacturer.

- Write ordering is the ability to preserve the order of write operations and is a requirement of SQL Server on Hardware. SQL Server requires write ordering to be maintained for local and remote I/O targets. If write ordering is not maintained, the system breaks the write ahead logging protocol.

- Torn I/O refers to a situation where a partial write takes place, leaving the data in an invalid state. SQL Server documents this as a "Torn Page." Torn page or I/O prevention means that the disk system must provide sector alignment and sizing in a way that prevents torn I/O, including splitting I/O among various I/O entities in the I/O path.

SAN and RAID configurations

SAN hardware supports zoning, which an admin can use to dedicate full physical disks to a LUN so that the I/O is isolated from the SQL Server. SQL Server performance depends on read/write access to physical disks. So, basic RAID fundamentals are important when you deploy servers on a SAN. SQL is an I/O intensive application, and the highest priority for performance should be given to the SQL Servers disk configurations. Microsoft recommends to separate SQL log files from data files at a physical disk level. In a SAN environment, this means separating the database log files to LUNs, which have dedicated physical disks, from those that are not shared with other LUNs, including those that are used for other database logs, data, or index files. A write response time of 2 - 3 ms and a read response time no greater than 20ms is the goal for this configuration according to Microsoft best practices. Isolate physical disks that servers or applications share, because different servers that run different applications with different I/O characteristics might cause problems.

Further considerations

On large database servers that use SANS, isolate the TempDB, Logs, and database file subsystems from the physical disk space requirements. Because large database engines usually use TempDBs and transaction logs, additional consideration must given to separate an SQL TempDB or transaction log files to its own RAID subsystem, preferably RAID 10. The Microsoft SQL best practice typically recommends RAID 1+0 for MDF and Index Files, and Microsoft does not recommended RAID5 because of increased read/write times that are associated with it. Based on Microsoft recommendations use a 250Kb stripe size. Never set stripe sizes lower than 64Kb because that setting is the size of a single SQL extent.

Storage requirements

It is an advantage to have distinct storage subsystems and controllers for specific components. For best results, set up discrete storage subsystems for each of the bases, the database logs, and the database containers. The database logs are typically characterized by the need for very fast sequential I/O. The database containers can have much more diverse access patterns, but are highly insulated from storage impact because of the database buffer pools.

Known issues and limitations

Insights data lake- Datasource authentication is currently limited to SQL authentication.

- Linked objects is limited to external sites, Fixlets, tasks, and

analysis.Note: Custom sites are not supported.

- BigFix Enterprise Server operators, roles, and unmanaged assets are not ingested by Insights.

- Insights supports ingesting data from eight or fewer BigFix Enterprise databases, depending on performance qualification.

- The performance of the Tableau reports varies when they display data for a larger set of devices.

- You can use Tableau sample reports are only on an BigFix Insights database that has completed an ETL. The reports run as though an ETL is scheduled daily.