Improving test script robustness

Sometimes the recorded steps in a test cannot be recognized when the test is played back, which leads to test failures or errors. To avoid these object recognition issues, you can change the way objects are identified, use object locator conditions, apply Responsive Design conditions, or add a list of preferred properties to be used during the test run. You can also perform user actions asynchronously during playback to make automated tests more reliable. These techniques make tests more robust and improve the chances that they can be included in an automated testing process.

One reason for step failure is that the application has been updated. You record a test with one version of an application. When you reuse the test on a newer version of the application that has, for example, new buttons or new object locations, these objects cannot be found when the test is played back. Another reason for step failure is that data in the test, such as a date, has changed since the test was recorded.

- By modifying the way the objects are identified in a test and choosing a more appropriate identifier:

  - You can change the property and value used to identify the target object on a step so that it can be found more easily when the test is replayed. See OBJECT PROPERTIES.

  - You can modify a step target by using an image as the main property or modify the generated image. See IMAGE RECOGNITION IN A TEST.

  - You can replace a recognition property with a regular expression in a verification point.

- By adding object locator conditions in a step to find the target object when the test is played back. See OBJECT LOCATION IN A TEST.

- By applying Responsive Design conditions to actions that should be played back only once. See RESPONSIVE DESIGN CONDITION.

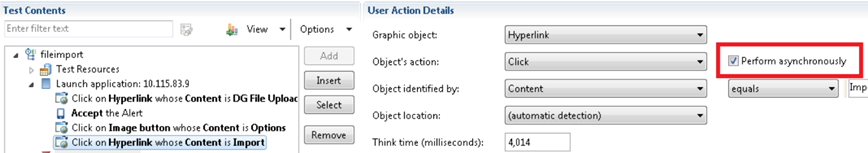

- By selecting the Perform asynchronously option for click, hover, or Press Enter actions. See ACTIONS PERFORMED ASYNCHRONOUSLY.

OBJECT PROPERTIES

Object properties are captured during test recording and displayed in read-only mode in the Properties table of the UI Test Data view. To find an object in the application-under-test during playback, HCL OneTest™ UI compares the properties of the object that were captured during recording with the property description that is displayed in the User Action Details area of the test editor. These properties differ for Android, iOS, and Web UI applications.

When you select a step in a recorded test, the test editor displays the properties of the object on which the action is performed. The identifying property is listed in the Object identified by field, followed by an operator field and a field for the property value. Depending on the graphical object, the standard object identifiers include Content, Class, Id, and Xpath.
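To make the property, operator, and value triple more concrete, the following Python sketch models how such a condition could be checked against the properties of the objects on screen. It is a hypothetical illustration, not HCL OneTest™ UI code: the operator names `equals`, `contains`, and `matches`, and the `Condition`, `satisfies`, and `find_target` names are assumptions, and the product's actual operators and matching logic may differ.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Condition:
    prop: str      # property name, e.g. "Content", "Class", "Id", "Xpath"
    operator: str  # hypothetical operator names: "equals", "contains", "matches"
    value: str     # expected value (a regular expression when operator is "matches")


def satisfies(props: Dict[str, str], cond: Condition) -> bool:
    """Return True if the object's property value satisfies the condition."""
    actual = props.get(cond.prop)
    if actual is None:
        return False  # the object does not expose this property
    if cond.operator == "equals":
        return actual == cond.value
    if cond.operator == "contains":
        return cond.value in actual
    if cond.operator == "matches":
        return re.fullmatch(cond.value, actual) is not None
    raise ValueError(f"unknown operator: {cond.operator}")


def find_target(objects: List[Dict[str, str]], cond: Condition) -> Optional[Dict[str, str]]:
    """Return the first object whose properties satisfy the condition, or None."""
    for props in objects:
        if satisfies(props, cond):
            return props
    return None
```

For example, with a screen modeled as a list of property dictionaries, `find_target(screen, Condition("Id", "equals", "date"))` returns the object whose `Id` is `date`, and changing only the operator or value changes how strictly the step identifies its target.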

You can change these parameters (property, operator, property value) in the User Action Details area of the test editor or from the UI Test Data view by using the context menu. When you select actions in the Test Contents list, the UI Test Data view is automatically synchronized to display the screen capture of the selected step. You can modify properties in the Screen Capture tab, the Elements tab, or the Properties table by using the context menu.

To improve object identification, specify the properties to be used in the test. Some applications use properties that are described by custom attributes, and these attributes are not detected automatically during the test run. To override this default behavior, you can set an ordered list of custom attributes so that they are used as the main properties during test execution.
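The effect of an ordered list of preferred properties can be sketched as a simple fallback: the first attribute in the list that has a value on the object becomes its identifier, and the standard properties are tried only afterwards. This is a minimal Python sketch under stated assumptions; the custom attribute names `data-test-id` and `data-qa`, the order of the standard properties, and the `pick_identifier` function are hypothetical examples, not values or APIs defined by the product.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical custom attributes, in order of preference.
PREFERRED: Sequence[str] = ("data-test-id", "data-qa")
# Standard identifiers tried afterwards (order assumed for illustration).
STANDARD: Sequence[str] = ("Id", "Content", "Class", "Xpath")


def pick_identifier(
    props: Dict[str, str],
    preferred: Sequence[str] = PREFERRED,
    standard: Sequence[str] = STANDARD,
) -> Optional[Tuple[str, str]]:
    """Return the first (property, value) pair found, trying custom attributes first."""
    for name in (*preferred, *standard):
        value = props.get(name)
        if value:  # skip properties that are missing or empty
            return name, value
    return None
```

Because the list is ordered, a stable custom attribute such as `data-qa` wins over an `Id` that might be regenerated between builds.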

OBJECT LOCATION IN A TEST

When a test is run, the graphical objects in the test must be detected automatically, but in some cases the element on which the action is performed can be difficult to identify. In this case, you must update the test script to give more accurate information for locating the object on which you want to perform the action.

Here is an example: you record a test in which one step is "Click on Edit text whose content is 'August 30th, 2013'". If the test is played back automatically, it fails when the date is no longer August 30th, 2013. You must modify the step and give more accurate information to locate the object so that it can be found and used automatically when the test is run. HCL OneTest™ UI offers various ways to identify and locate objects and increase test script reliability.
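The list at the start of this topic mentions replacing a recognition property with a regular expression, and the same idea illustrates how a date-dependent value can be made independent of the date. The following Python sketch shows a pattern that accepts any date written in the same style as 'August 30th, 2013'. It assumes Python `re` syntax; whether the test editor accepts a regular expression on this particular step, and which regular-expression dialect it uses, are not stated here, so treat the pattern as an illustration to check in the tool.

```python
import re

# Value captured at recording time: the step fails once the displayed date changes.
RECORDED_CONTENT = "August 30th, 2013"

MONTHS = (
    "January|February|March|April|May|June|July|"
    "August|September|October|November|December"
)
# Accepts any date in the "Month Dth, YYYY" style instead of one fixed date.
DATE_PATTERN = re.compile(rf"(?:{MONTHS}) \d{{1,2}}(?:st|nd|rd|th), \d{{4}}")


def content_matches(actual: str) -> bool:
    """Return True if the whole content string is a date in that style."""
    return DATE_PATTERN.fullmatch(actual) is not None
```

Using `fullmatch` rather than `search` keeps the pattern from accepting content that merely contains a date.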

In HCL OneTest™ UI, various object location operators are available to identify objects in an application-under-test. They are displayed in the Object location fields in the User Action Details area of the test editor. Two object locations can be used in a test step to set location conditions and find the target object in the test. For details, see Setting object location conditions in a test script.
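Location conditions narrow the search by describing where the target sits relative to other, more stable objects. The Python sketch below is a hypothetical model of combining two such conditions on screen coordinates: the `Box` type and the `right_of`, `below`, and `locate` helpers are illustrative names, not the product's object location operators, and treating an ambiguous result as a failure is a design assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Box:
    name: str
    x: int  # left edge
    y: int  # top edge (y grows downward, as on screen)
    w: int
    h: int


Predicate = Callable[[Box], bool]


def right_of(anchor: Box) -> Predicate:
    """Candidate starts at or past the anchor's right edge and overlaps it vertically."""
    return lambda c: (
        c.x >= anchor.x + anchor.w
        and c.y < anchor.y + anchor.h
        and anchor.y < c.y + c.h
    )


def below(anchor: Box) -> Predicate:
    """Candidate starts at or below the anchor's bottom edge."""
    return lambda c: c.y >= anchor.y + anchor.h


def locate(candidates: List[Box], conditions: List[Predicate]) -> Optional[Box]:
    """Return the single candidate meeting every condition; None if zero or several match."""
    hits = [c for c in candidates if all(cond(c) for cond in conditions)]
    return hits[0] if len(hits) == 1 else None
```

In the date example, locating the Edit text as "right of the Date label and below the header" no longer depends on the content of the field, so the step keeps working when the date changes.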

IMAGE RECOGNITION IN A TEST

When a test is recorded, the object on which an action is performed is identified by its main property, which is usually a text property. Sometimes, text properties are not easily identifiable. This can be the case when there is no property description or no label to identify the target element in a test step. In such cases, the test generator uses an image property to identify the elements on the test steps.

To reduce image recognition issues, HCL OneTest™ UI uses image correlation to recognize and manage objects during playback. The image on which the action is performed (the reference image) is captured during test recording and compared with the image of the application-under-test at playback (the candidate image). An adjustable recognition threshold determines how much difference is accepted between the reference image and the candidate image when evaluating whether the images match. The default recognition threshold is set to 80, and the default tolerance ratio is set to 20.

Image recognition can fail in situations such as the following:

- You record a test on a mobile phone and play it back on a desktop. Because the image width and height change from one device to another, test playback fails on devices that do not have the same screen ratio.

- Some target objects in the recording are no longer the same when the test is played back. Example: When a virtual keyboard is used in a secure application, the position of the digit buttons can change from one session of the server to the next.

If the threshold is set to 0, the candidate image that is most similar to the reference image is selected, even if it is not the same image. If you set the threshold to 100, the slightest difference between images prevents image recognition. For example, an image whose width or height differs because it is resized when played back on a tablet would not be selected with a threshold of 100, even though it is the same image. You can change the aspect ratio tolerance if a test fails on devices that do not have the same screen ratio, or if the images available in the application at playback are different from the ones available when the test was recorded.
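The threshold behavior described above can be expressed in a few lines. In this hypothetical Python sketch, scores range from 0 to 100 (higher means more similar), the best candidate is accepted only when its score reaches the threshold, and the aspect ratio tolerance is interpreted as the maximum percentage difference between the width/height ratios of the reference and candidate images. That interpretation of the tolerance, and the `aspect_ratio_ok` and `select_candidate` names, are assumptions; the product's actual scoring formula is not described in this topic.

```python
from typing import List, Optional, Tuple


def aspect_ratio_ok(ref_size: Tuple[int, int], cand_size: Tuple[int, int],
                    tolerance_pct: float = 20.0) -> bool:
    """Assumed interpretation: the candidate's width/height ratio may differ
    from the reference ratio by at most tolerance_pct percent."""
    ref_ratio = ref_size[0] / ref_size[1]
    cand_ratio = cand_size[0] / cand_size[1]
    return abs(cand_ratio - ref_ratio) / ref_ratio * 100 <= tolerance_pct


def select_candidate(scored: List[Tuple[str, float]],
                     threshold: float = 80.0) -> Optional[str]:
    """Pick the best-scoring candidate (scores 0..100) if it reaches the threshold.

    threshold=0 always returns the most similar candidate, even if it is not
    the same image; threshold=100 accepts only a perfect score.
    """
    if not scored:
        return None
    name, score = max(scored, key=lambda item: item[1])
    return name if score >= threshold else None
```

In this sketch, a caller could first discard candidates that fail `aspect_ratio_ok` and then pass the remaining scores to `select_candidate`.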

When you set the recognition threshold in the test editor, HCL OneTest™ UI displays an image matching preview to help you find which images can accurately identify the target object when the test is played back. The best candidate images are shown in green. Images whose score is above the threshold but that are not the most appropriate are shown in yellow. Images whose score is below the threshold are shown in red; these candidate images do not match the reference image.
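The color coding of the preview can be modeled as a classification of candidate scores against the threshold. This Python sketch is illustrative only: it assumes the same 0 to 100 score scale and the default threshold of 80, treats a score equal to the threshold as a match, and uses a hypothetical `classify` function.

```python
from typing import Dict


def classify(scores: Dict[str, float], threshold: float = 80.0) -> Dict[str, str]:
    """Map each candidate to a preview color: green for the best match,
    yellow for other matches at or above the threshold, red for non-matches."""
    best = max(scores, key=scores.get) if scores else None
    colors: Dict[str, str] = {}
    for name, score in scores.items():
        if score < threshold:
            colors[name] = "red"     # below threshold: does not match
        elif name == best:
            colors[name] = "green"   # best candidate
        else:
            colors[name] = "yellow"  # matches, but not the best candidate
    return colors
```

If even the best candidate scores below the threshold, every candidate is red, which corresponds to a step whose target cannot be recognized at playback.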

You can find the image correlation details in the test report that is displayed when test execution is complete.

For details, see Modifying a step target using an image as the main property.

RESPONSIVE DESIGN CONDITION

Some applications use Responsive Design; that is, the application behavior or graphical display adapts to the target device. For example, many applications are designed to change the format of their graphical elements according to the screen size, the screen orientation, the version of the operating system, and other such parameters.

Other applications require users to log in and provide their location. Still others play tutorials that explain how to use the application the first time it is installed and run; after that, the tutorials are not shown. These situations can cause test failures.

To avoid these test failures, you can set execution conditions on a selection of variable actions. That way, a block of actions is executed the first time the test is run but is not executed the next time the test is run. This is an example of a Responsive Design condition. For details, see Creating Responsive Design conditions in a test. In version 8.7.1, this feature is available for Android applications only.
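A Responsive Design condition can be thought of as a guard around a block of actions: the block runs only when its condition holds for the current device or run. The following Python sketch models that idea with hypothetical `Device` and `ConditionalBlock` types; it is not the product's API, and the device attributes shown (operating system, orientation, first launch) are examples drawn from the situations described above.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Device:
    os: str
    orientation: str    # "portrait" or "landscape"
    first_launch: bool  # True if the app has never been run on this device


@dataclass
class ConditionalBlock:
    """Hypothetical model: a block of test actions guarded by an execution condition."""
    condition: Callable[[Device], bool]
    actions: List[Callable[[], None]] = field(default_factory=list)

    def run(self, device: Device) -> bool:
        """Run every action if the condition holds; return whether the block ran."""
        if not self.condition(device):
            return False  # condition not met: the whole block is skipped
        for action in self.actions:
            action()
        return True
```

For example, a block that dismisses the first-launch tutorial would use `lambda d: d.first_launch` as its condition, so it runs on a fresh installation and is skipped on later runs instead of failing because the tutorial screen is absent.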

ACTIONS PERFORMED ASYNCHRONOUSLY